Hello Everyone! Today we will discussion about the one of most important and interesting activity which is play a vital role in data engineering area. If you are data engineer or you sit around data lover then you must heard about ETL terminology. If you did not hear then no need to worries about. In this article, we are going to discuss about it in detail.

Before knowing about the ETL process, we need to know why this activity became as necessity these days? And after ETL, what we can achieve? As we know that there are multiple applications we have and every application generating or consume the data but it does not necessary every application will provide you data as per your required format so in this case you have to put some extra effort to convert the data as per your desire.

ETL refers to Extraction, Transform and Load. ETL operations are often performed by fit-for-purpose tools that have been on the market for a long time, and sometimes by custom in-house programs.

Extracts data from homogeneous or heterogeneous data sources.

Transforms the data for storing it in proper format or structure for querying and analysis purpose.

Load it into the final target (database, more specifically, operational data store, data mart, or data warehouse)

Let’s discuss about Big data platform.

Here I am using three nodes of CentOS cluster and I deployed Hadoop with Apache Spark, Apache Hive, Apache Airflow and Jupyter Notebook.

As you can see in ETL high level architecture there are four phases; source, staging, transform and publish. Let discuss about these four phases.

1) Source: In this phase, we have flat files and this file we can get from any applications but in my case this is available on my local Linux machine and we are going to copy to table hdfs location.

2) Staging(RAW Layer database): In this phase, we are going to create table in hive and defining all columns data types as String.

3) Transform(Transform Layer database): In this phase, we are going to transform the data types of raw layer table as data dictionary. Data dictionary is defined by business so we need to transform the data types of table’s columns as data dictionary.

4) Publish: This is final database layer which will be available for the analyzing purposes.

All the above phase, we will create the workflow on Apache Airflow.

Below is the Apache airflow DAG code

Step 1: create table in raw database.

Step 2: transfer the datasets into the hdfs raw databases table

directory. you can get the code for this step in airflow etl.py file.

Step 3: create table into transform database as per data dictionary.

Step 4: pull the from raw database, type cast as per data dictionary and insert into transform database.

Step 5: create table into publish layer database.

Step 6: Insert all records from transform layer database to Publish layer database.

Data Validation:

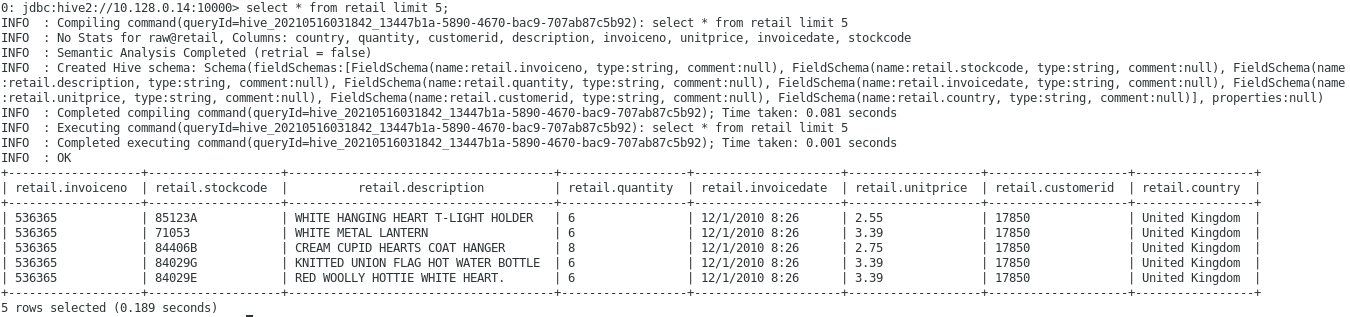

Raw Layer:

Transform Layer:

Publish Layer:

Finally we have successfully completed the fully automated ETL activity :) .

0 comments:

Post a Comment