Introduction

Ever asked an AI a question, only to get a vague or totally off-target response? You’re not alone. That’s where prompt engineering comes in—the skill of crafting clear, effective instructions so AI models like ChatGPT or GPT-5 deliver exactly what you need.

Think of it like tuning a SQL query for performance, or giving precise directions to a talented assistant. Good prompts save time, reduce frustration, and unlock professional-grade results. Whether you're a developer, data analyst, marketer, or just AI-curious, learning to communicate with AI is a game-changer.

Let’s break it down into 7 simple steps anyone can start using today.

Why Prompt Engineering Matters Now

AI tools are powerful, but they don’t read minds. Without clear guidance, even the smartest model can miss the mark. Prompt engineering makes useful, accurate, and well-structured outputs far more likely, turning AI from a novelty into a reliable partner.

The 7-Step Prompt Formula:

1. Assign a ROLE

Tell the AI who it should be. This sets expertise and tone.

Do: “You are a senior data scientist with 10 years of experience.”

Avoid: “Maybe act like an expert…”

2. Define the TASK

Be specific about what you want. Ambiguity leads to generic answers.

Do: “Create a 3-day Bangalore food itinerary for under ₹2000.”

Avoid: “Tell me about food in Bangalore.”

3. Provide CONTEXT

Give background that shapes the response.

Do: “The traveler is vegetarian, loves local dosa spots, and is visiting in July.”

4. Outline the REASONING

Explain how you want the AI to think through the task.

Do: “First, list popular vegetarian eateries. Then, filter by budget and proximity. Finally, explain your choices.”

5. Set RULES & Constraints

State what should be avoided or included.

Do: “Exclude chain restaurants. Keep meals under ₹300 each. Include walking distances.”

6. Specify STOP Conditions

Tell the AI when to end.

Do: “Stop after listing 8 eateries with addresses and cost estimates.”

7. Choose the OUTPUT FORMAT

Define how the result should be presented.

Do: “Provide a bulleted list, followed by a daily budget table in Markdown.”
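The seven steps map naturally onto a reusable template. The Python sketch below is one possible way to assemble them; `PromptSpec` and `build_prompt` are hypothetical names chosen for illustration, not part of any library. The uppercase section labels mirror the ones used in the Spark example later in this article, the "Bangalore food guide" role is our own illustrative choice, and the other fields reuse the examples from the steps above. Empty sections are skipped, so the same template also works when you only fill in a few steps.

```python
from dataclasses import dataclass


@dataclass
class PromptSpec:
    """Hypothetical container for the seven parts of the prompt formula."""
    role: str
    task: str
    context: str = ""
    reasoning: str = ""
    rules: str = ""
    stop: str = ""
    output_format: str = ""


def build_prompt(spec: PromptSpec) -> str:
    """Join the non-empty parts into one prompt, one labeled section each."""
    # The role is stated as a plain sentence; the remaining parts get
    # uppercase labels that mirror the step names in the formula.
    parts = [spec.role]
    labeled = [
        ("TASK", spec.task),
        ("CONTEXT", spec.context),
        ("REASONING", spec.reasoning),
        ("RULES", spec.rules),
        ("STOP", spec.stop),
        ("OUTPUT", spec.output_format),
    ]
    for label, text in labeled:
        if text.strip():  # skip any step the user chose not to fill in
            parts.append(f"{label}: {text.strip()}")
    return "\n\n".join(parts)


if __name__ == "__main__":
    spec = PromptSpec(
        role="You are a Bangalore food guide.",  # illustrative role, not from the article
        task="Create a 3-day Bangalore food itinerary for under ₹2000.",
        context="The traveler is vegetarian, loves local dosa spots, "
                "and is visiting in July.",
        reasoning="First, list popular vegetarian eateries. Then, filter by "
                  "budget and proximity. Finally, explain your choices.",
        rules="Exclude chain restaurants. Keep meals under ₹300 each. "
              "Include walking distances.",
        stop="Stop after listing 8 eateries with addresses and cost estimates.",
        output_format="Provide a bulleted list, followed by a daily budget "
                      "table in Markdown.",
    )
    print(build_prompt(spec))
```

Because each step is its own field, you can start with just `role`, `task`, and `output_format`, then fill in the other fields one at a time and compare the responses you get.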

Example: From Basic to Powerful Prompt

Before:

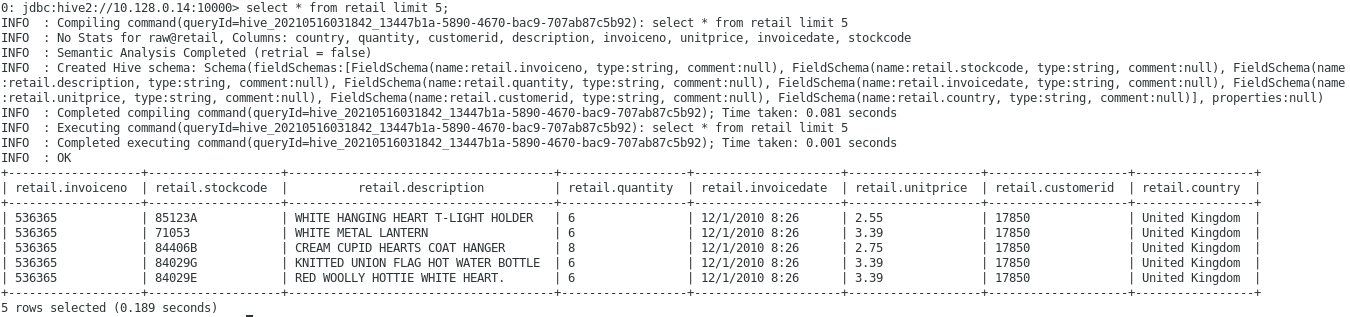

“How do I make my SQL query faster?”

After:

You are a data engineer optimizing Apache Spark jobs.

TASK: Improve the performance of the SQL query below.

CONTEXT:

- Dataset size: 1 TB
- Tables involved: 3 large fact tables with date-based partitioning
- Current runtime: 25 minutes

REASONING:

1. Analyze join types and suggest alternatives.
2. Check for data skew and recommend remedies.
3. Review shuffle operations and partitioning strategies.

RULES:

- Avoid UDFs if possible.
- Keep runtime under 5 minutes.
- Use broadcast joins where applicable.

STOP: After providing the optimized query and a summary of changes.

OUTPUT:

- Bullet-point explanation of bottlenecks
- Revised query in a code block
- Comparison table of current vs. estimated runtime and resource usage
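If you run a prompt like this from a script, a rough sanity check can tell you whether the response at least has the shape the OUTPUT section asked for. The sketch below is a heuristic of our own, not a standard tool: `check_output_shape` is a hypothetical helper that uses simple regular expressions to look for a bullet line, a fenced code block, and a Markdown table separator row. The patterns are assumptions about how a Markdown response is laid out, and the check covers structure only, not whether the optimization advice is any good.

```python
import re

# Heuristic patterns for the three parts the OUTPUT section requests.
# A bullet line starts with "-", "*" or "•" followed by whitespace and text.
BULLET = re.compile(r"^\s*[-*•]\s+\S", re.MULTILINE)
# A fenced code block: three backticks, optional language tag, body, closing fence.
CODE_FENCE = re.compile(r"`{3}[^\n]*\n.*?\n`{3}", re.DOTALL)
# A Markdown table has a separator row such as |---|---| under its header.
TABLE_SEPARATOR = re.compile(
    r"^\s*\|?\s*:?-{3,}:?\s*(\|\s*:?-{3,}:?\s*)+\|?\s*$", re.MULTILINE
)


def check_output_shape(response: str) -> dict:
    """Report which of the requested output parts appear in a model response."""
    return {
        "bullet_explanation": bool(BULLET.search(response)),
        "code_block": bool(CODE_FENCE.search(response)),
        "comparison_table": bool(TABLE_SEPARATOR.search(response)),
    }


if __name__ == "__main__":
    print(check_output_shape("- only a bullet"))
    # {'bullet_explanation': True, 'code_block': False, 'comparison_table': False}
```

A missing part is a cue to re-ask with a firmer OUTPUT instruction rather than proof that the answer is wrong.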

Try this structured approach—you’ll notice sharper, more actionable answers immediately.

Start Simple, Then Experiment

You don’t need all 7 steps every time. Start with Role + Task + Format, then add layers as you refine. The key is to be clear and intentional.

💡 Try this today: Pick a task you’d normally Google and craft a prompt using at least 3 of the steps above. Compare the AI’s response to what you usually get.

Conclusion

Prompt engineering isn’t just a technical skill; it’s the new literacy for collaborating with AI. In 2026 and beyond, those who can communicate clearly with intelligent systems will save hours, boost creativity, and solve problems faster.

Start small, practice consistently, and soon you’ll be guiding AI to produce exactly what you envision—or even beyond it.

Your turn: What’s the first prompt you’ll engineer? Share your before-and-after in the comments!

Happy prompting!